On December 19, 2025, Timothy Lottes responded [1] to a Wccftech article [2] profiling graphics programmer Filippo Tarpini, making a series of highly misleading claims about HDR. We break them down.

CLAIM: “The room environment is the primary thing that determines what the screen looks like.”

FACT CHECK: Largely true. You need both a capable HDR display and a suitable environment [3].
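To make the ambient-light point concrete, here is a minimal sketch of how light reflected off the screen compresses contrast. The display figures and the 1 cd/m² of reflected light are illustrative assumptions, not measurements from any cited source, and `effective_stops` is a hypothetical helper name.

```python
import math

def effective_stops(peak_nits, black_nits, reflected_nits):
    """Contrast in stops once reflected ambient light is added to every pixel."""
    ratio = (peak_nits + reflected_nits) / (black_nits + reflected_nits)
    return math.log2(ratio)

# Hypothetical display: 1000-nit peak, 0.001-nit black level (assumed values).
dark_room = effective_stops(1000.0, 0.001, 0.0)
# Same display with 1 nit of room light reflected off the panel (assumed value).
lit_room = effective_stops(1000.0, 0.001, 1.0)

print(f"dark room: {dark_room:.1f} stops, lit room: {lit_room:.1f} stops")
```

Under these assumed numbers, a single nit of reflected light cuts the usable contrast from roughly 20 stops to roughly 10, which is why the environment matters as much as the panel.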

CLAIM: “Tone mapping is a marketing scam.”

FACT CHECK: False. Tone mapping is essential to adapt the luminance and color of the content to the limited capabilities of consumer displays:

“Each PQ grayscale level corresponds to a specific display light level, from 0 to 10,000 nits. The internal processing of each consumer display adjusts the highest PQ levels to fit within the display’s brightness capability. This adjustment is called ‘tone mapping’” [4].

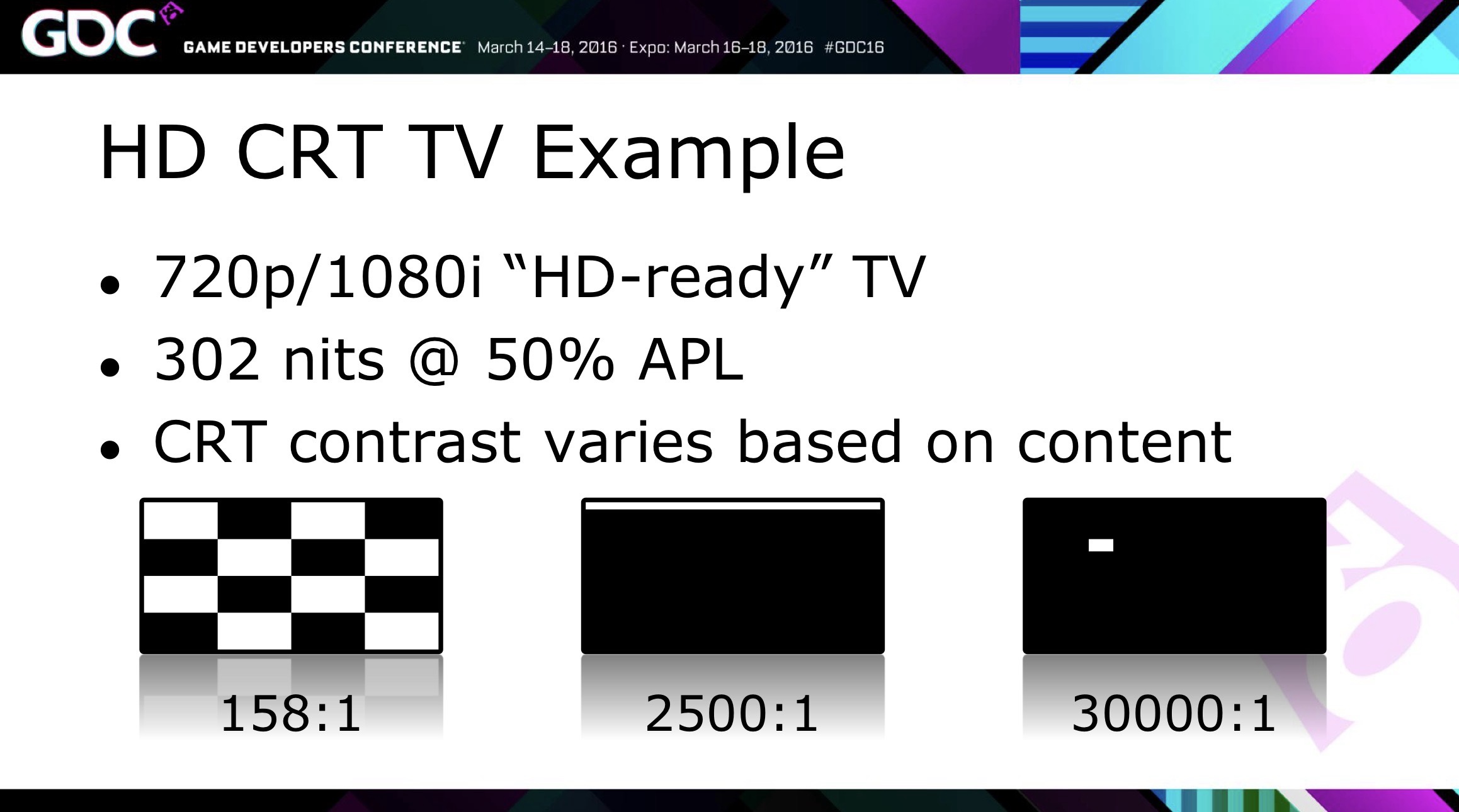

CLAIM: A Sony Wega CRT TV measured “over 14 stops of contrast at low APL. In a dark room, contrast is effectively like an HDR display.”

FACT CHECK: Misleading. “High dynamic range (HDR) is… commonly defined by contrast and peak luminance” (emphasis added) [5]. A display with only a ~300-nit peak is insufficient.

CRTs, including high-end Sony WEGA models such as the KD-34XBR960 (often considered one of the best CRTs ever made), operate within the constraints of Standard Dynamic Range.

CLAIM: “PQ is a horrible standard: it requires expensive shader code, the 10-bit requirement reduces FPS by 20%, it wastes digital code values on unused highlight luminance (10K nits) and shifts banding to the mid-range.”

FACT CHECK:

- The performance cost of pow (log/exp) operations in the shader is minimal on modern GPUs [6]. While developers typically target the most common GPUs used by gamers, Lottes targets “really low-end GPUs at extremely high frame rates” [7].

- The 10-bit requirement has a negligible impact on performance.

- Only ~7% of PQ’s code space is allocated to the 5,000-10,000 nit range [8].

- Moreover, visual tests show that even when limited to 1,000 nits, PQ is more efficient than gamma, and the 10,000-nit PQ curve shows almost no visible loss compared to the 1,000-nit PQ curve while reaching 10 times the peak luminance [9].

- The PQ curve is perceptually uniform, allocating more codes to the lower luminances, where the eye is more sensitive:

“Unlike the encoding used in SDR, PQ concentrates the grayscale levels where your vision is most sensitive. With PQ, High Dynamic Range provides not only more digital bits, but also the most efficient use of each bit” [10].
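The code-allocation figures above can be checked against the PQ inverse EOTF directly. The following is a minimal sketch using the constants published in SMPTE ST 2084; the function name `pq_encode` and the choice of a normalized 0 to 1 signal (rather than limited-range 10-bit codes) are assumptions made for illustration.

```python
# PQ constants as published in SMPTE ST 2084 / ITU-R BT.2100.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

def pq_encode(nits):
    """Inverse EOTF: absolute luminance in cd/m^2 -> normalized signal in [0, 1]."""
    y = max(nits, 0.0) / 10000.0
    yp = y ** M1
    return ((C1 + C2 * yp) / (1.0 + C3 * yp)) ** M2

for nits in (100, 1000, 5000, 10000):
    print(f"{nits:>6} nits -> signal {pq_encode(nits):.3f}")

# Fraction of the normalized signal range that encodes 5,000 to 10,000 nits.
share_above_5000 = 1.0 - pq_encode(5000)
print(f"share of signal range above 5,000 nits: {share_above_5000:.1%}")
```

Running this shows that about half of the signal range sits at or below 100 nits, and only a small slice at the top is spent on the 5,000 to 10,000 nit region, consistent with the ~7% figure cited above.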

CLAIM: “Rec.2020 wastes code values when displays are < P3.”

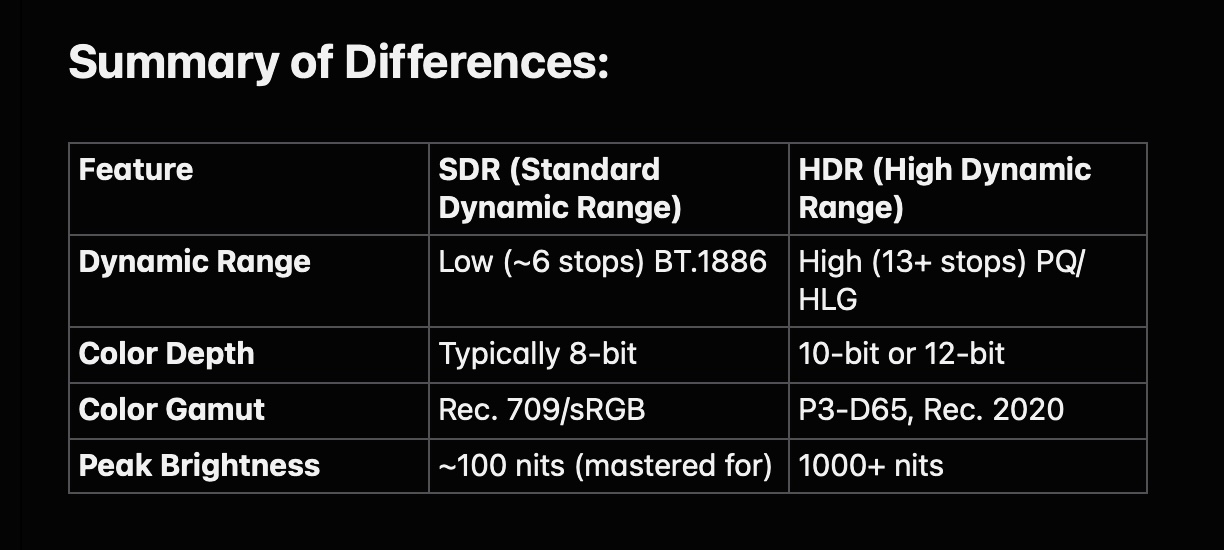

FACT CHECK: HDR requires 10 or 12 bits per channel regardless of the gamut container.

CLAIM: “At three bits per pixel, banding is a lot worse with PQ.”

FACT CHECK: Invalid test. Lottes used a bit depth (3 bits) far below the 10 or 12 bits the HDR standards require, guaranteeing severe banding.

If we apply Lottes’ own flawed logic (testing a curve far outside its designed range), research shows that extending BT.1886 to just 1,000 nits, a fraction of PQ’s range, requires more than 12 bits to avoid severe banding in dark scenes. Meanwhile, the 10,000-nit PQ curve maintains perceptual uniformity at 10 bits for the same content [11].
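To see why a 3-bit test says little about a 10-bit format, it helps to look at how far apart adjacent code values land in luminance. The sketch below is not Lottes’ test or the SMPTE paper’s method; it simply decodes every code through the ST 2084 EOTF, assuming full-range normalized codes, and reports the largest jump between neighbors above 1 cd/m². The helper names and the 1 cd/m² floor are assumptions chosen for illustration.

```python
# PQ constants as published in SMPTE ST 2084 / ITU-R BT.2100.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

def pq_decode(signal):
    """EOTF: normalized signal in [0, 1] -> absolute luminance in cd/m^2."""
    p = signal ** (1.0 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1.0 / M1)

def code_luminances(bits):
    """Luminance of every code value, assuming full-range normalized codes."""
    top = (1 << bits) - 1
    return [pq_decode(code / top) for code in range(top + 1)]

def largest_step_ratio(bits, floor_nits=1.0):
    """Largest luminance ratio between adjacent codes at or above floor_nits."""
    lum = code_luminances(bits)
    return max(b / a for a, b in zip(lum, lum[1:]) if a >= floor_nits)

for bits in (3, 10):
    count = len(code_luminances(bits))
    print(f"{bits:>2} bits: {count} codes, largest step x{largest_step_ratio(bits):.3f}")
```

With only 8 codes spanning 0 to 10,000 nits, each step multiplies luminance several times over, so heavy banding is a property of the bit depth under test. At 10 bits the same curve moves in steps of a few percent at most.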

CLAIM: “The difference between ‘SDR’ and ‘HDR’ is all artificial marketing. It is quite literally just a OEM artificially limiting the capacity of the display contingent on using the worse non-display-relative HDR signal protocol.”

FACT CHECK: Counterfactual. First of all, SDR is based on obsolete technology, with standards like BT.1886 designed to mimic the limitations of CRT displays.

Secondly, manufacturers aren’t holding anything back. For instance, in independent testing of its SDR mode, a QD-OLED TV measured a sustained 1,024 cd/m² in a 10% window and a sustained 376 cd/m² in a 100% window [12], demonstrating that modern HDR displays in SDR mode can achieve brightness levels unheard of in any consumer CRT TV.

Takeaway: Technological breakthroughs developed for HDR have raised the peak brightness of displays, leading to brighter SDR modes with improved contrast and black levels.

The fact that the Perceptual Quantizer (PQ) is an absolute standard is precisely what makes HDR superior for delivering high-quality, consistent visual experiences across various displays.

CLAIM: “An ‘SDR’ display with fantastic static contrast with a low APL scene in a dark room is going to look better than an HDR display in daylight that has less real world contrast due to reflection on the screen.”

FACT CHECK: Strawman argument. Misrepresents the capabilities and intended use case of the technologies involved.

Let’s play along, though. Judged by his own criterion (“fantastic static contrast”), Lottes’ ‘14-stop’ Wega CRT TV failed miserably, which is why, rather than accept the poor ANSI contrast result (~7 stops), he chose to cite the far higher figure from the nonstandard sequential method instead.
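Because a stop is a doubling, the gap between the two figures is easier to appreciate as contrast ratios. This is a small worked conversion using only the 14-stop and ~7-stop numbers quoted above; the function names are hypothetical.

```python
import math

def stops_to_ratio(stops):
    """Each stop doubles the contrast ratio."""
    return 2.0 ** stops

def ratio_to_stops(ratio):
    """Inverse conversion: contrast ratio -> stops."""
    return math.log2(ratio)

sequential = stops_to_ratio(14)  # figure Lottes cites (sequential method)
ansi = stops_to_ratio(7)         # approximate ANSI result for the same set

print(f"14 stops = {sequential:,.0f}:1")
print(f" 7 stops = {ansi:,.0f}:1")
print(f"gap      = {sequential / ansi:,.0f}x, or {ratio_to_stops(sequential / ansi):.0f} stops")
```

In ratio terms, the choice of measurement method is the difference between 16,384:1 and 128:1.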

CLAIM: “If claiming PQ is future ready implies that a gamma display-relative signal somehow isn’t ready, that would be silly, because legacy gamma display relative can target anything.”

FACT CHECK: False. Traditional gamma curves waste code values in highlights and starve the shadows. As ITU-R BT.2390 explains, “This inefficiency was not a serious problem with SDR systems… but when trying to represent HDR luminance ranges, an improved curve is required” [13].

CONCLUSION

Timothy Lottes’ criticisms of HDR rely on a recurring tactic: constructing tests that violate the standards he claims to evaluate (testing at 3 bits a format that requires at least 10), admitting to using “fake” data points, and targeting extreme, non-representative scenarios guaranteed to produce negative outcomes.

Footnotes

- Timothy Lottes, “Re HDR Filippo Tarpini”, Neokineogfx YouTube channel (Dec. 19, 2025)

- Alessio Palumbo, “The HDR Gaming Interview – Veteran Developer Explains Its Sad State and How He’s Coming to Its Rescue”, Wccftech (Dec. 2, 2025)

- In the YouTube video, Lottes admits to fudging data in his 2016 GDC presentation. Describing a key data point demonstrating how ambient light destroys contrast, he stated: “Now, I will note that this last one’s kind of a fake one… I did the computation as if I had a 400-nit screen, which is not exactly right.” This admission establishes a disturbing pattern of presenting misleading or invalid test methodologies.

- HDR10plus.org. Understanding the HDR10 Ecosystem. (2022)

- Kenneth Chen, Nathan Matsuda, Jon McElvain, Yang Zhao, Thomas Wan, Qi Sun, Alexandre Chapiro. 2025. What is HDR? Perceptual Impact of Luminance and Contrast in Immersive Displays. In SIGGRAPH ‘25: Proceedings of the Special Interest Group on Computer Graphics and Interactive Techniques Conference. ACM, New York, NY, USA.

- Filippo Tarpini, comment on “Re HDR Filippo Tarpini,” YouTube video. (Dec. 19, 2025). Tarpini states: “Sure it takes more pow (log/exp) operations in its shader, but on 2025 GPUs, these are completely irrelevant.”

- Timothy Lottes, comment on “Re HDR Filippo Tarpini,” YouTube video (Dec. 21, 2025). Gamers with “really low-end” GPUs don’t typically have “true” HDR displays.

- HPA Tech Retreat 2014, Day 5 report, Q&A on PQ efficiency.

- Scott Miller, Mahdi Nezamabadi, Scott Daly, “Perceptual Signal Coding for More Efficient Usage of Bit Codes”, SMPTE 2012. The paper concludes: “Though the 1K PQ curve had slightly more precision than the 10K PQ curve, the visible differences were very small given the drastic difference in peak output.”

- HDR10plus.org. Understanding the HDR10 Ecosystem. (2022)

- Scott Miller, et al., “Perceptual Signal Coding for More Efficient Usage of Bit Codes”, SMPTE 2012. Visual testing revealed that ITU-R Rec. BT.1886 gamma, when extended to a 1000-nit peak, required more than 12 bits to avoid visible banding in dark scenes (see “Dark Ramp” in Table 2, and JND Cross results for levels below 0.05 cd/m² in Table 1). In contrast, the 10,000-nit PQ curve achieved the same goal with 9-10 bits.

- Justin Gosselin, Adam Babcock & John Peroramas, “Samsung S95F OLED TV Review,” Rtings.com (May 13, 2025)

- ITU-R BT.2390-12, Clause 5.2 “Design of the PQ non-linearity”